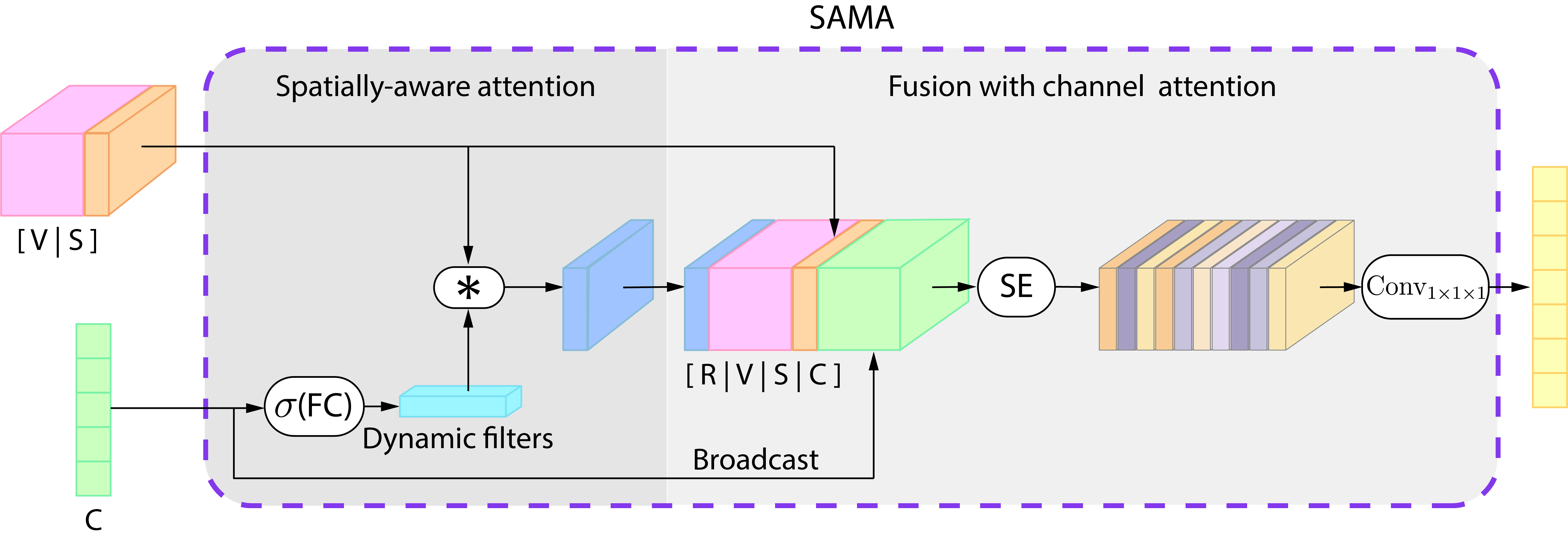

SAMA: Spatially-Aware Multimodal Network with Attention

Lung cancer is the deadliest cancer worldwide, which has driven the development of medical and computational methods for earlier diagnosis, with the aim of reducing its fatality rate. Radiologists screen for and diagnose lung cancer by localizing and characterizing pathologies, so visual clinical findings are inherently tied to their spatial location in the images. Previous work, however, has not exploited this spatial relationship in multimodal data. In this work, we propose a Spatially-Aware Multimodal Network with Attention (SAMA) for early lung cancer diagnosis. Our approach takes advantage of the spatial relationship between visual and clinical information, emulating the diagnostic process of the specialist. Specifically, we propose a multimodal fusion module that dynamically filters visual features using clinical data and then applies a channel attention mechanism. We provide empirical evidence of SAMA's potential to spatially integrate visual and clinical information. Our method outperforms the state-of-the-art method by 14.3% on the LUng CAncer Screening with Multimodal Biomarkers Dataset.
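To make the fusion idea concrete, the following is a minimal sketch of dynamic filtering followed by channel attention. It is not the SAMA implementation. It assumes a 1x1 dynamic filter generated from the clinical vector by a single dense layer, a response map appended to the visual features as an extra channel, and a squeeze-and-excitation style gate for the channel attention. All function names, parameter names, and shapes (`dynamic_filter_response`, `channel_attention`, `fuse`, `w_gen`, `w_att`) are hypothetical and chosen only for illustration.

```python
import math


def linear(x, weights, bias):
    """Dense layer: `weights` holds one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dynamic_filter_response(visual, clinical, w_gen, b_gen):
    """Generate a 1x1 filter from clinical data and slide it over the image.

    visual: feature map as nested lists with shape [C][H][W].
    clinical: clinical feature vector of length D.
    Returns a response map of shape [H][W]; high values mark locations
    whose visual features agree with the clinically generated filter.
    """
    filt = linear(clinical, w_gen, b_gen)  # one weight per visual channel
    n_ch, height, width = len(visual), len(visual[0]), len(visual[0][0])
    return [[sum(filt[c] * visual[c][i][j] for c in range(n_ch))
             for j in range(width)]
            for i in range(height)]


def channel_attention(features, w_att, b_att):
    """Assumed SE-style gate: average-pool each channel, then rescale it."""
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
              for ch in features]
    gates = [sigmoid(z) for z in linear(pooled, w_att, b_att)]
    gated = [[[g * v for v in row] for row in ch]
             for g, ch in zip(gates, features)]
    return gated, gates


def fuse(visual, clinical, params):
    """Append the clinical response map to the visual channels, then gate."""
    response = dynamic_filter_response(
        visual, clinical, params["w_gen"], params["b_gen"])
    stacked = visual + [response]  # C + 1 channels
    return channel_attention(stacked, params["w_att"], params["b_att"])


if __name__ == "__main__":
    # Toy input: 2 visual channels on a 2x2 grid, 3 clinical features.
    visual = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [0.5, 0.5]]]
    clinical = [1.0, 0.0, 2.0]
    params = {
        "w_gen": [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]],  # D=3 -> C=2
        "b_gen": [0.0, 0.0],
        "w_att": [[0.0] * 3 for _ in range(3)],       # (C+1) x (C+1)
        "b_att": [0.0, 0.0, 0.0],
    }
    fused, gates = fuse(visual, clinical, params)
    print(len(fused), gates)
```

The point of the sketch is that the filter weights depend on the clinical input, so the same image yields a different spatial response map for a different patient record. In a trained model the dense-layer weights would be learned, and the filter could cover a larger spatial neighbourhood than the single pixel assumed here.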